Algorithms are playing an increasing role in the public sphere, whether to calculate the amount of social benefits, to allocate a place in a daycare center or to assess the risk that a company will default.

Numerous studies and reports have highlighted the diversity of issues related to the deployment of these systems, which are likely to influence or even automate decisions that can negatively affect individuals, such as in terms of autonomy or equity.

"From the selection of informational content to the prediction of crime recidivism, the use of algorithmic systems is embedded in environments and contexts that condition their impacts at both the individual and societal levels.Given this potential "force of impact", the call to evaluate the effects of these algorithmic systems has become increasingly urgent.This interest in impact issues has become particularly clear in the context of the use of algorithmic systems by the administration to make administrative decisions likely to affect individuals or groups.

France has established a legal framework to promote greater transparency of these systems and the right of individuals to be informed. Governments and civil society actors have developed algorithmic impact assessment (AIA) tools.

"What do these assessments correspond to, what do they contain, how are they implemented?"These questions are addressed in a study conducted by Elisabeth Lehagre, at the request of Etalab, which is carrying out several actions in this direction, within the interministerial directorate for digital technology (DINUM).

Evaluate: what? how? why?

The study takes a critical look at seven tools developed around the world (United Kingdom, Canada, New Zealand, United States and European Union). The first observation is the diversity of the tools examined: there is no standard model. Algorithmic impact assessments vary in both form and content.

- The AI Now Institute proposes a framework of good practices that includes five general recommendations (including the establishment of procedures for external verification and monitoring of impacts),

- The High-Level Expert Group for Trusted AI, convened by the European Commission, proposes a list of questions that serve as a basis for system self-assessment,

- The New Zealand government includes a risk matrix in its algorithm charter, which quantifies the likelihood of adverse effects on people (unlikely, occasional, likely) relative to the number of people potentially impacted (low, moderate, high).

- The tool proposed by the Government of Canada (Treasury Board) stands out because it automatically calculates the level of risk of a system from the answers given to a few questions. Depending on the level of impact thus calculated, specific recommendations are made.
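To make the idea of a risk matrix concrete, here is a minimal sketch in Python of how two ordinal scales like those described for the New Zealand charter could be combined into a single rating. The category names come from the text above; everything else (the class and function names, the multiplication rule and the thresholds) is a hypothetical illustration, not the actual mapping used in the charter or in any of the tools studied.

```python
from enum import IntEnum


class Likelihood(IntEnum):
    """Likelihood of adverse effects on people (labels from the text above)."""
    UNLIKELY = 1
    OCCASIONAL = 2
    LIKELY = 3


class Reach(IntEnum):
    """Number of people potentially impacted (labels from the text above)."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


def risk_rating(likelihood: Likelihood, reach: Reach) -> str:
    """Combine both axes into one rating.

    ASSUMPTION: multiplying the two ranks and cutting at 2 and 4 is an
    illustrative rule only; a real matrix defines each cell explicitly.
    """
    score = int(likelihood) * int(reach)  # ranges from 1 to 9
    if score <= 2:
        return "low"
    if score <= 4:
        return "moderate"
    return "high"


def render_matrix() -> list[list[str]]:
    """Return the full 3x3 matrix, one row per likelihood level."""
    return [[risk_rating(l, r) for r in Reach] for l in Likelihood]


if __name__ == "__main__":
    # Print the matrix with one likelihood level per row.
    for level, row in zip(Likelihood, render_matrix()):
        print(f"{level.name:<10}", row)
```

A questionnaire-based tool such as the Canadian one follows the same general pattern, with answers mapped to a score and the score to an impact level, although its actual scoring rules are not described here.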

Self-assessment tools that still need to be proven

The author of the study also focused on understanding how these tools are implemented in practice. Two questions emerge: how well these tools are understood by the actors who are supposed to use them, and the limits of self-evaluation. The author of the study asks: "Is impact evaluation sufficiently comprehensible to be usable?" The difficulties of understanding can be multiple: difficulty in precisely defining the perimeter and the object of the evaluation ("artificial intelligence systems", "algorithms", "automated decision systems"?) but also the fields of impact ("well-being", "rights and freedoms of individuals"?).

The second question concerns self-arbitration. Indeed, the vast majority of these tools were designed as internal self-assessment tools, where the people carrying out the assessment exercise are also those in charge of designing and deploying the systems being assessed. In order to avoid the risks associated with self-arbitration (where the same actors are potentially judge and jury), several recommendations were developed, including the importance of making algorithmic impact assessments public and transparent. The possibility of involving third parties (internal peers or external experts) in the evaluation or monitoring of systems is another avenue being explored.

Contents of the study

- Introduction

- What is an algorithmic impact assessment?

- A generic definition for a concept with multiple dimensions

- A self-assessment tool in various forms: from a good practice guide to an automated risk calculation tool

- What's in an algorithmic impact assessment?

- Identifying the purpose and effects to be evaluated: What is the focus of the evaluation?

- Impact assessment methods: how is the assessment carried out?

- The framework for algorithmic impact assessment

- Is algorithmic impact assessment "usable" in practice?

- Comprehensibility issue: is the algorithmic impact assessment comprehensible enough to be usable?

- Question of self-adjudication: does autonomy in assessing algorithmic impact undermine its usability?

- Conclusion

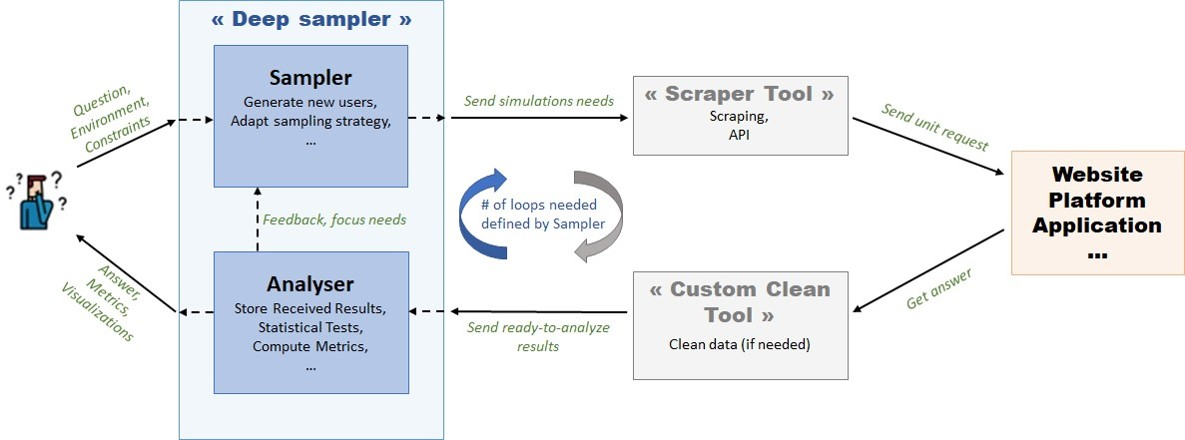

PEReN: a center of expertise within the State to analyze the functioning of digital platforms

"Today, understanding the universe of data is an essential prerequisite for analyzing the functioning of digital platforms, and implementing or adapting their regulation."It is in this context that the Digital regulation expertise centre (PEReN) was created, in 2020, "in order to constitute a center of expertise in data science that can be mobilized by government departments and independent administrative authorities wishing to do so".

PEReN is not a regulatory body: it provides support to government departments with regulatory competencies for digital platforms, and is involved in exploratory or scientific data science research projects.

LaborIA: a resource and experimentation center on artificial intelligence in the workplace

According to the OECD, 32% of jobs will be profoundly transformed by automation over the next 20 years. To support these transformations and prepare companies and employees for them, the Ministry of Labor, Employment and Integration and INRIA have joined forces to create a resource and experimentation center for artificial intelligence in the workplace.

Called "LaborIA", this resource center will allow a better understanding of artificial intelligence and its effects on work, employment, skills and social dialogue, with the aim of changing business practices and public action.

Sources

- 1. Elisabeth Lehagre: Algorithmic impact assessment: a tool that still needs to prove itself, 2021

- 2. Assessing the impact of algorithms: publication of an international study commissioned by Etalab

- 3. Pole of Expertise for Digital Regulation (PEReN)

- 4. LaborIA - Creation of a resource and experimentation center on artificial intelligence in the professional environment